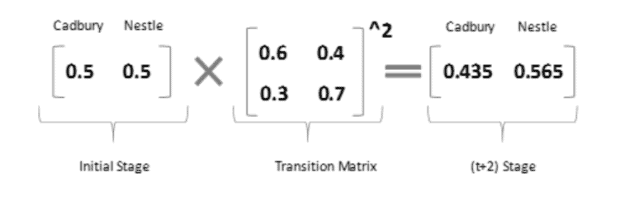

Fuzzy stationary distribution of the Markov chain of Figure 2, computed...

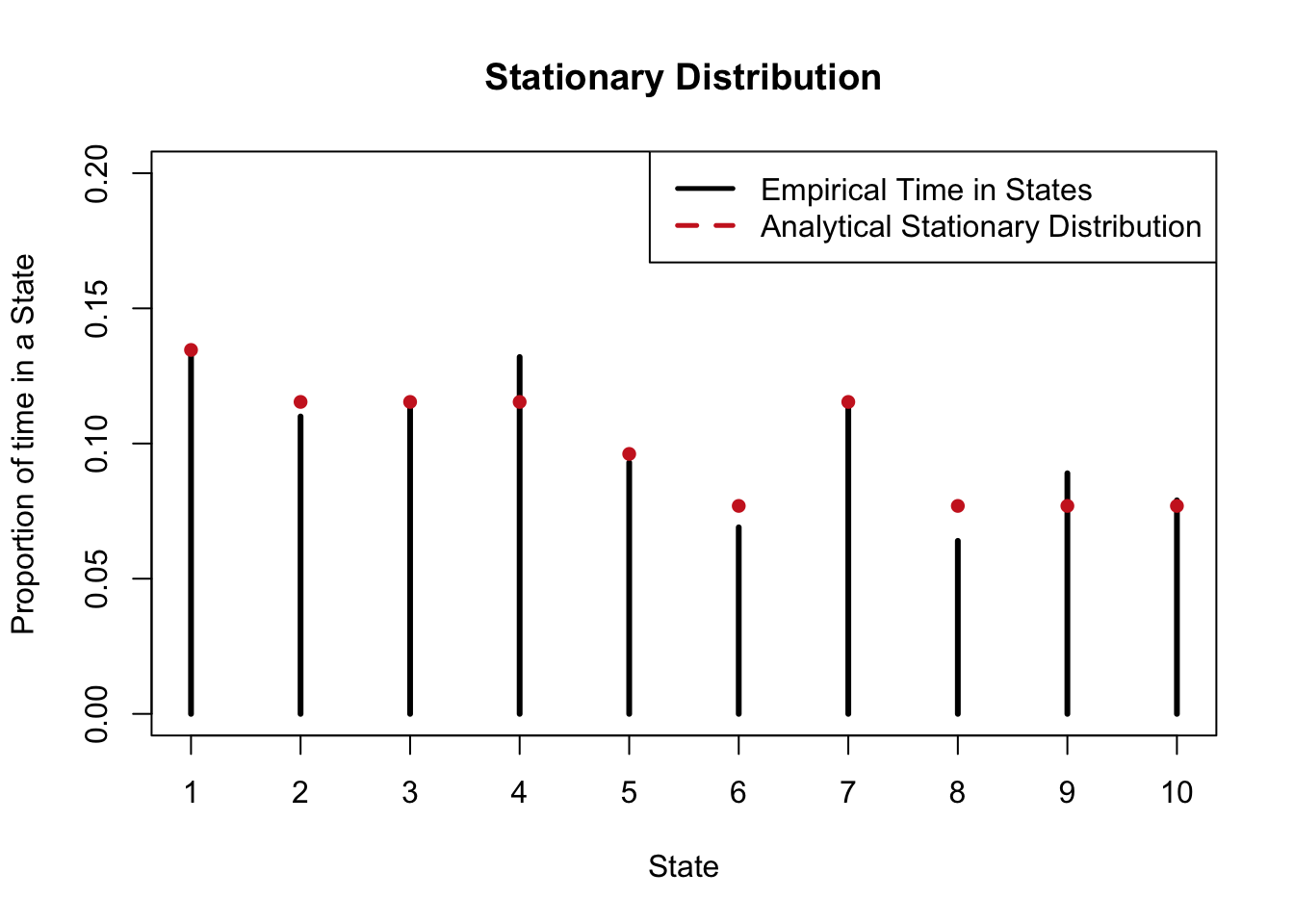

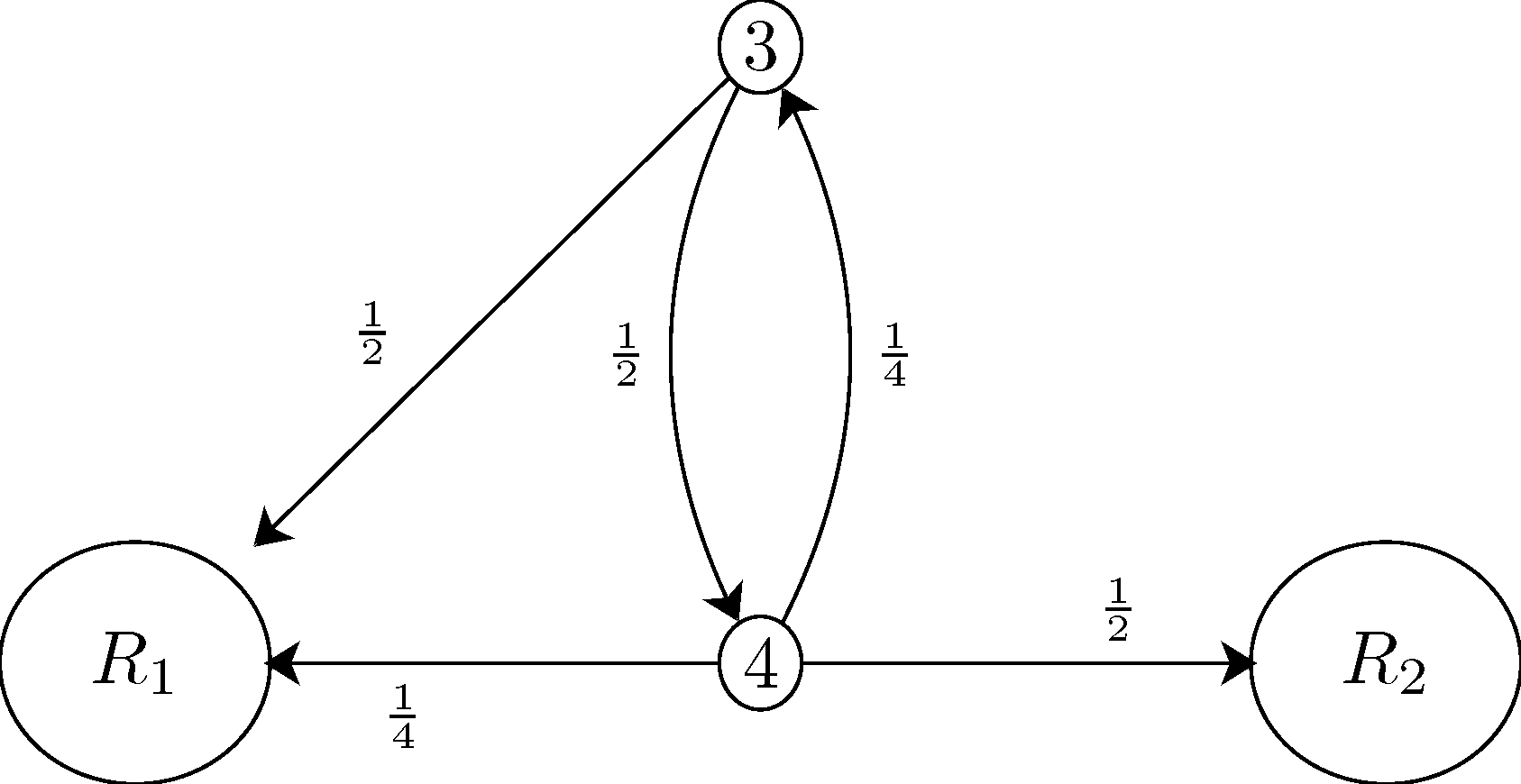

Figure B.1: Stationary distribution of the Markov chain system model....

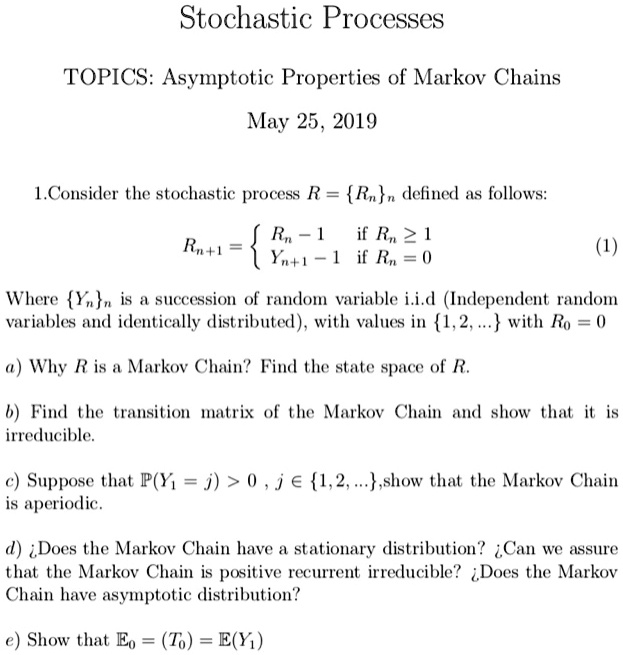

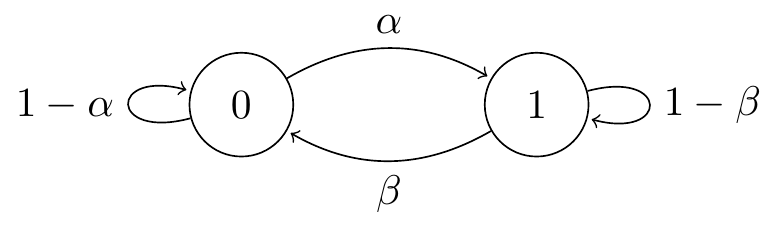

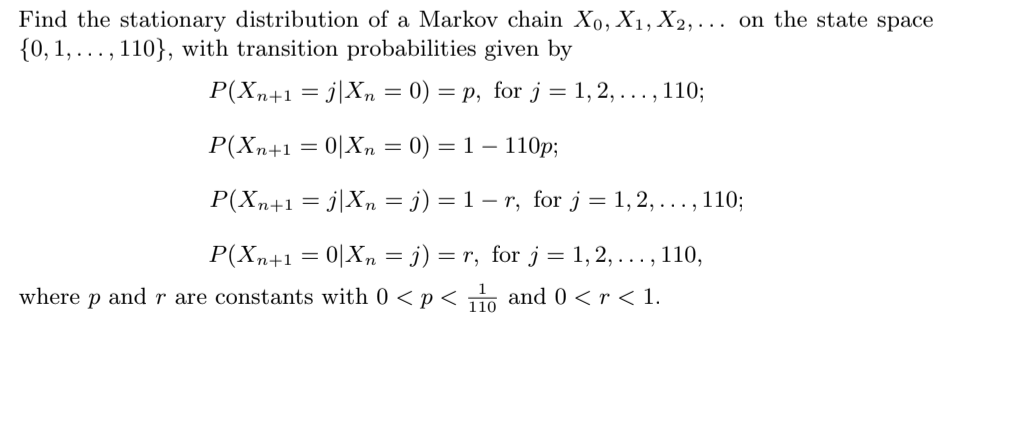

Stochastic Processes. Topics: Asymptotic Properties of Markov Chains. May 25, 2019. 1. Consider the stochastic process R = {R_n} defined as follows: ...

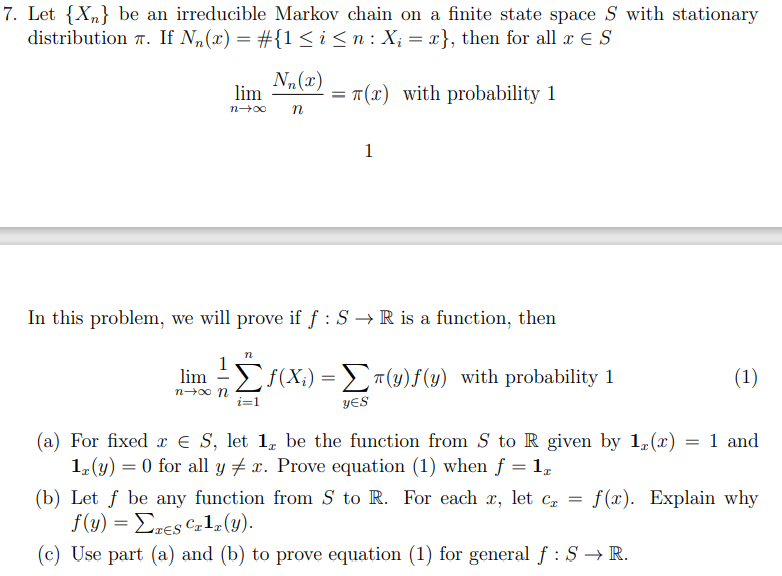

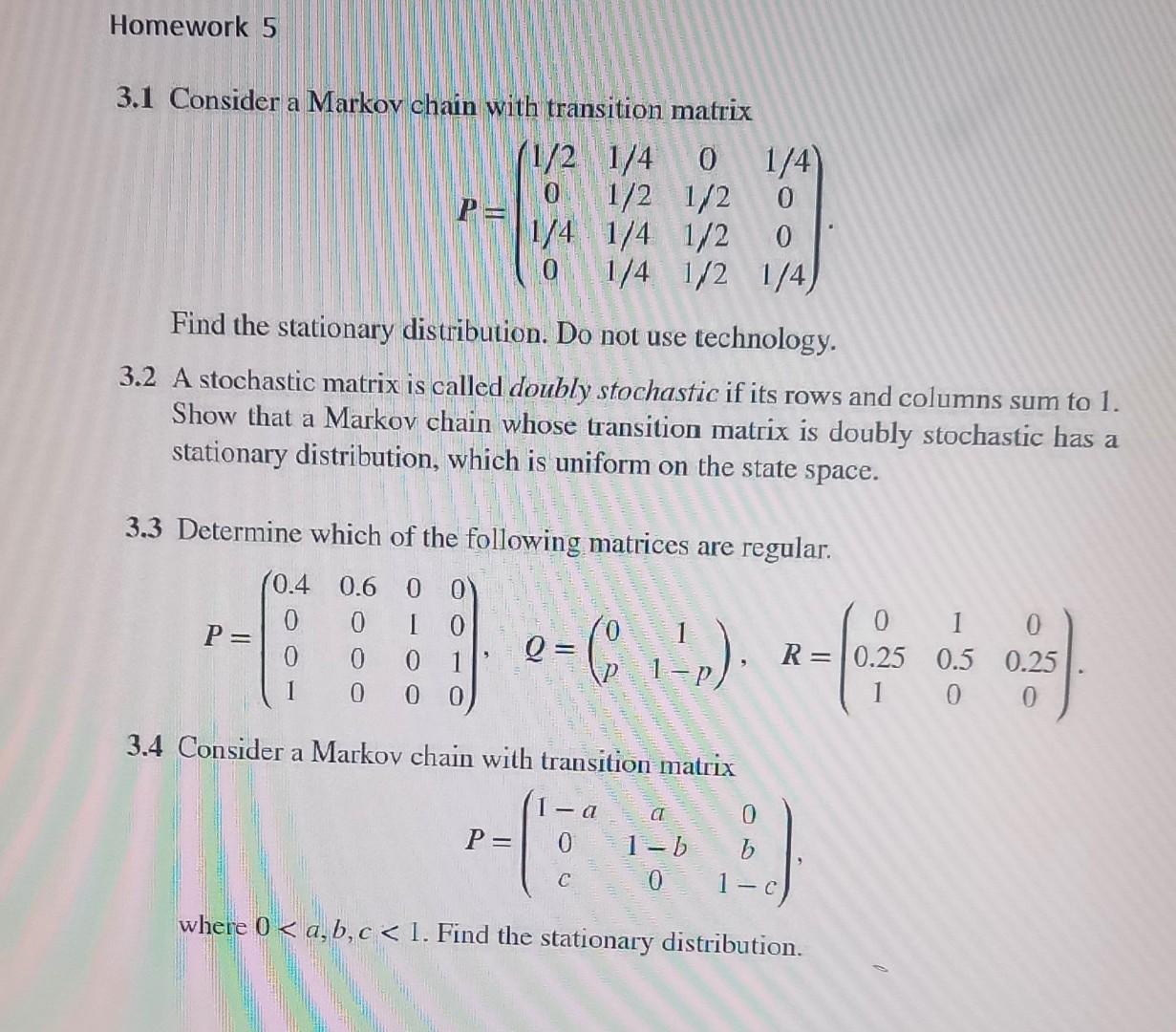

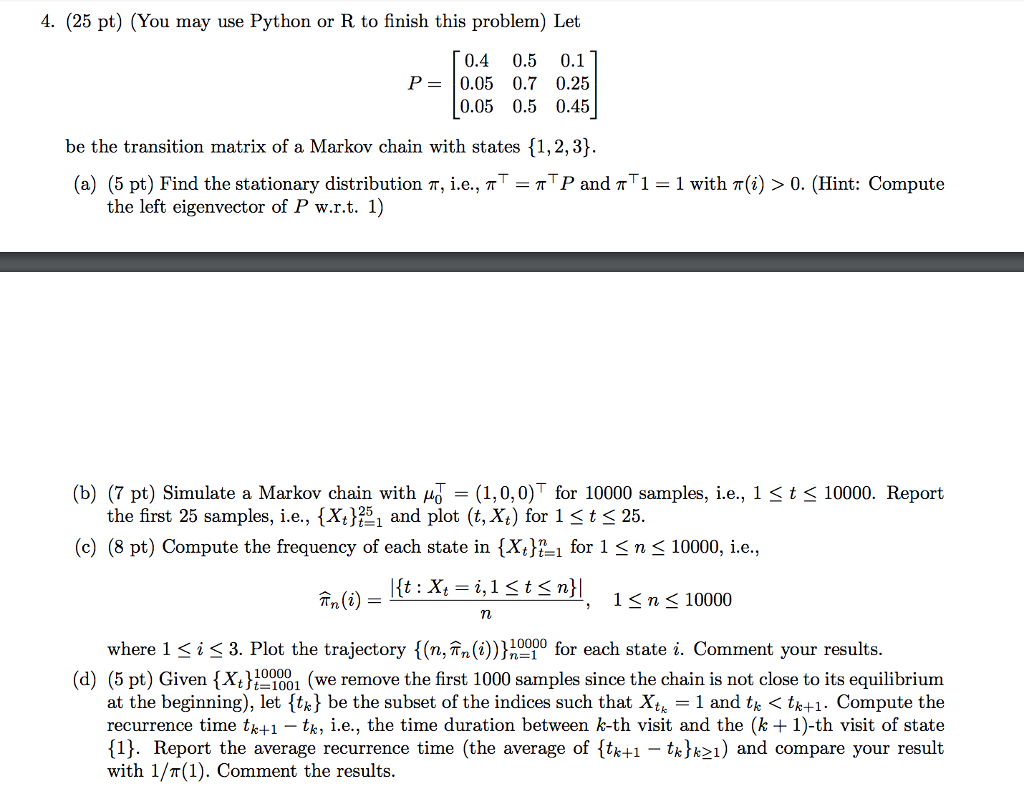

Proof of the existence of a stationary distribution in a Markov chain (linear algebra, Mathematics Stack Exchange)

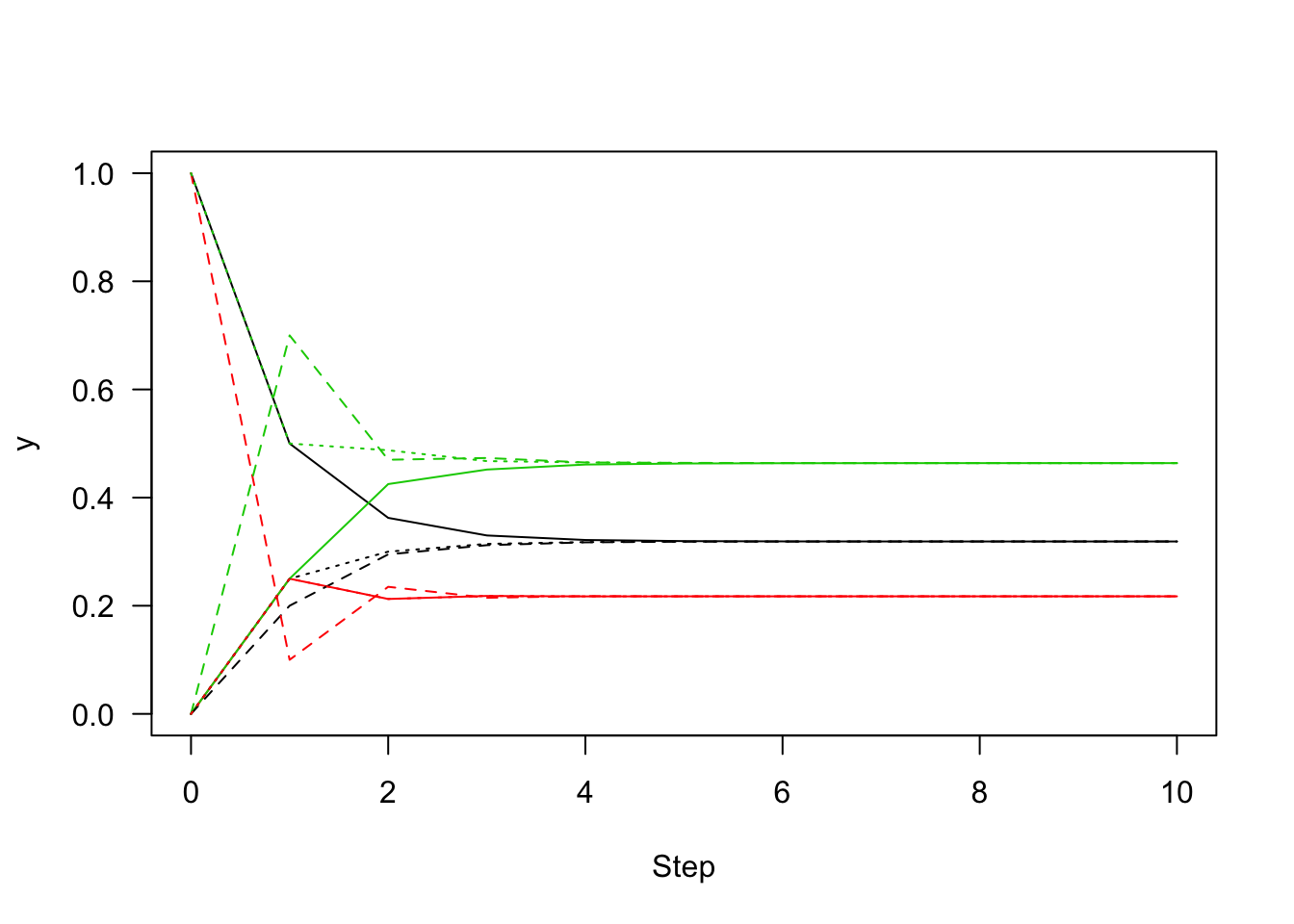

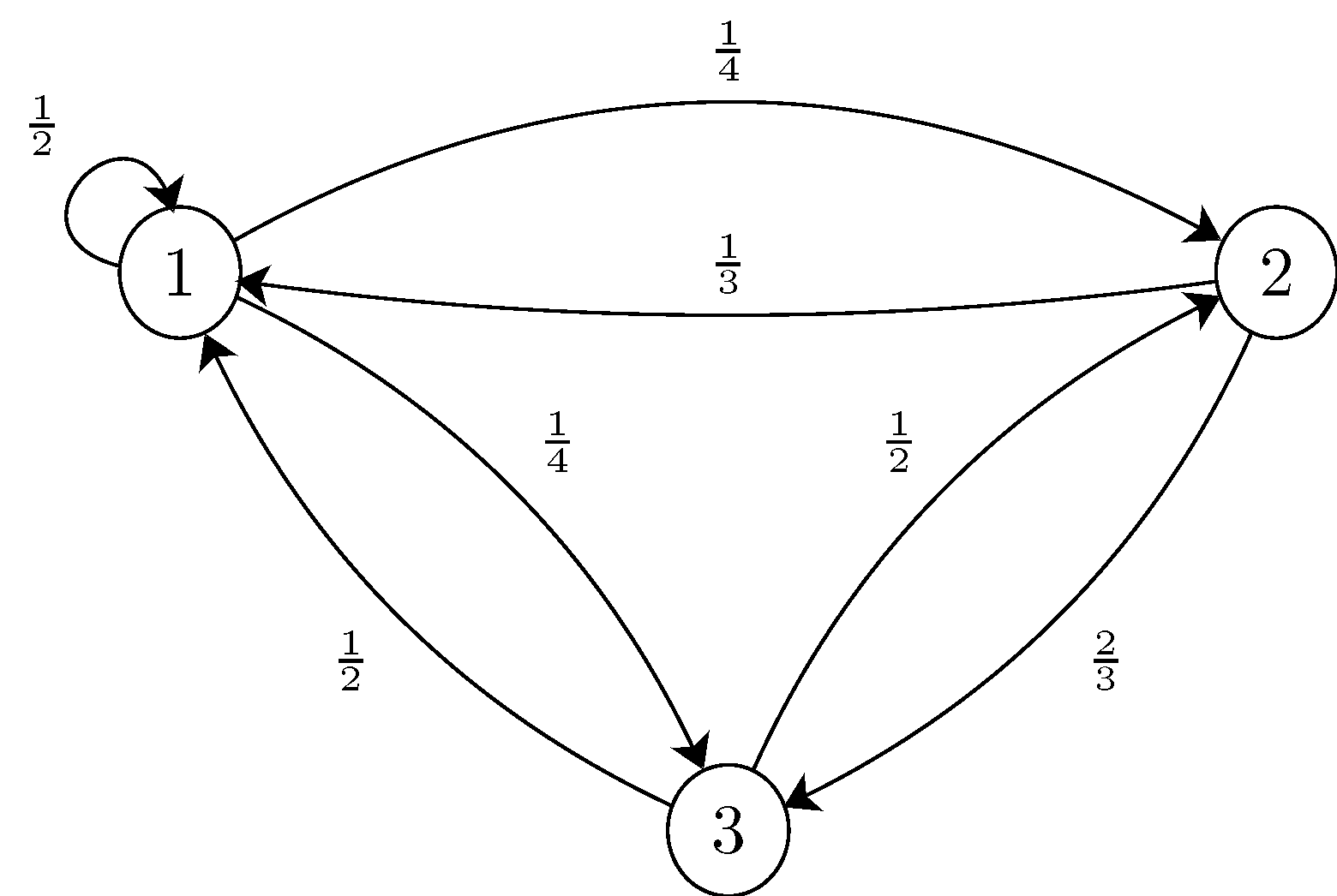

![[CS 70] Markov Chains – Finding Stationary Distributions - YouTube](https://i.ytimg.com/vi/YIHSJR2iJrw/maxresdefault.jpg)