Fast, Terabyte-Scale Recommender Training Made Easy with NVIDIA Merlin Distributed-Embeddings | NVIDIA Technical Blog

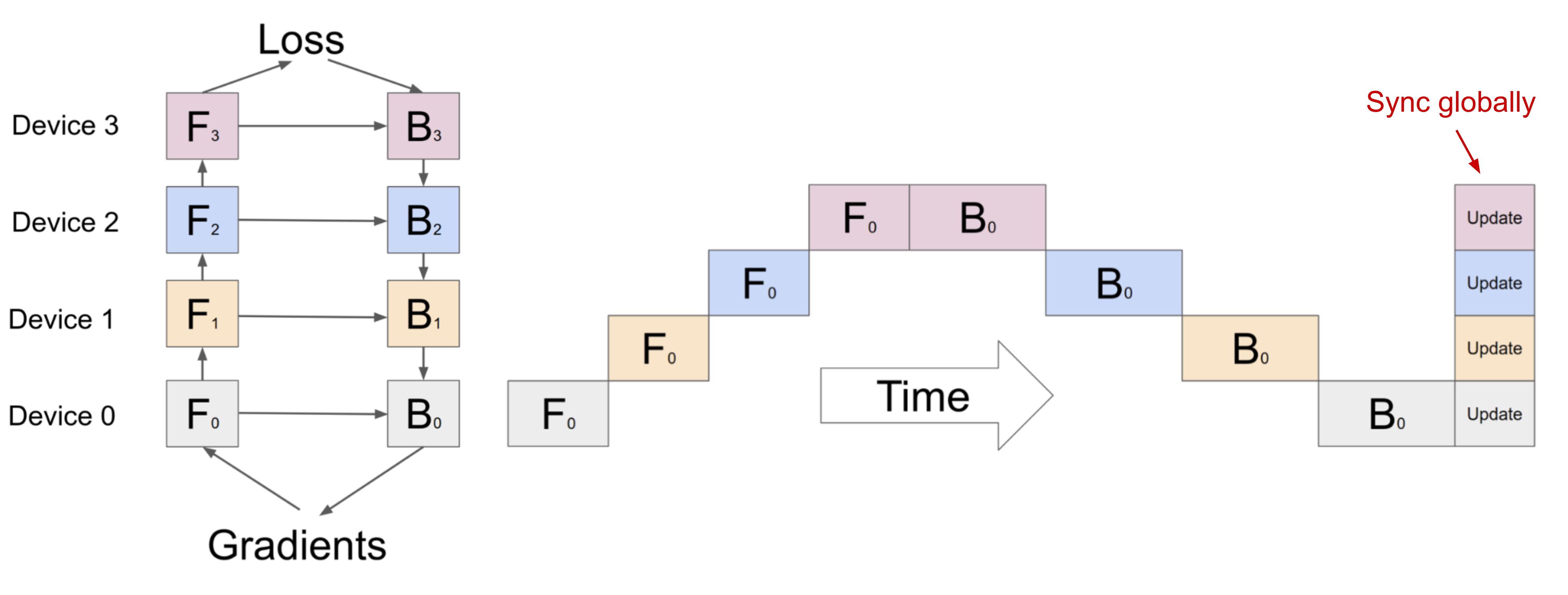

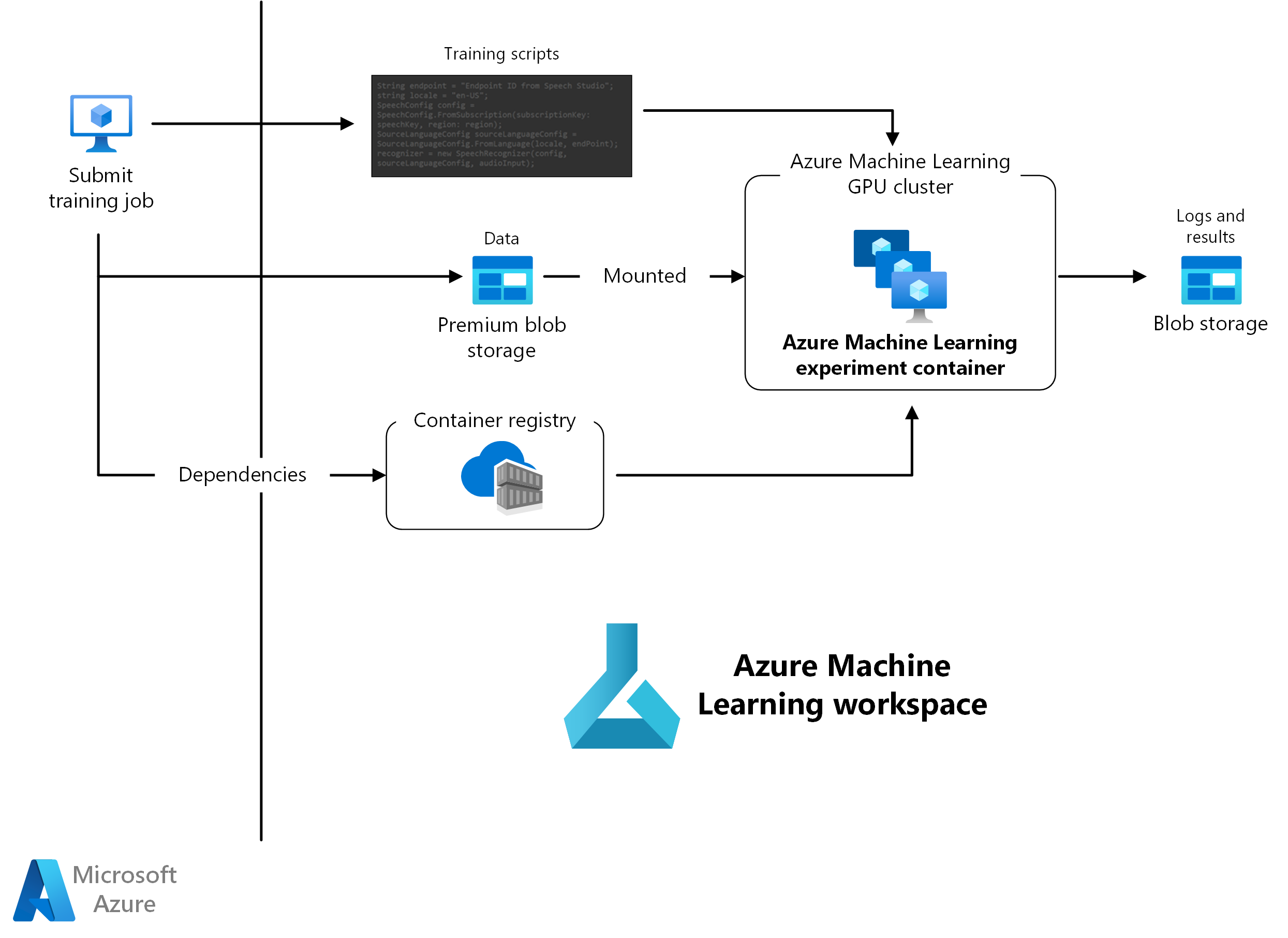

DeepSpeed: Accelerating large-scale model inference and training via system optimizations and compression - Microsoft Research

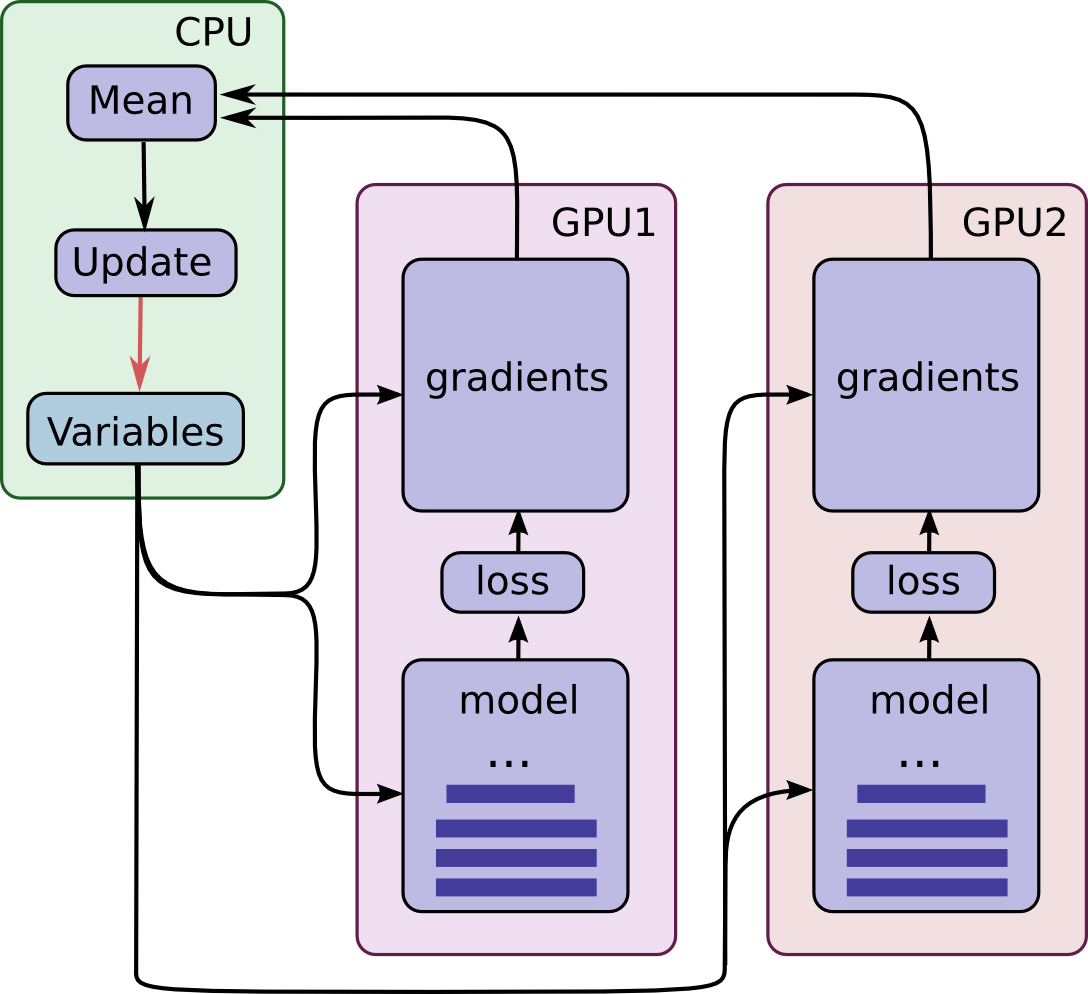

How distributed training works in Pytorch: distributed data-parallel and mixed-precision training | AI Summer

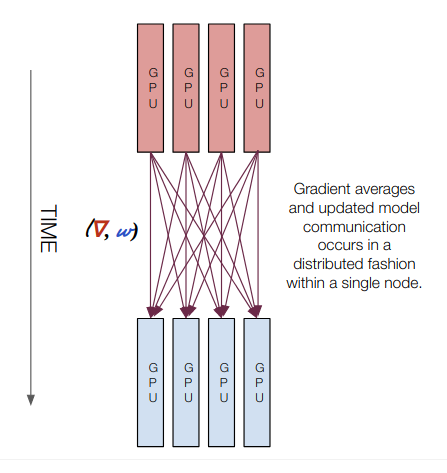

Deep Learning Frameworks for Parallel and Distributed Infrastructures | by Jordi TORRES.AI | Towards Data Science